Define hate speech.*

Help AI professionals create algorithms that represent people affected by hate speech.

*Transparently

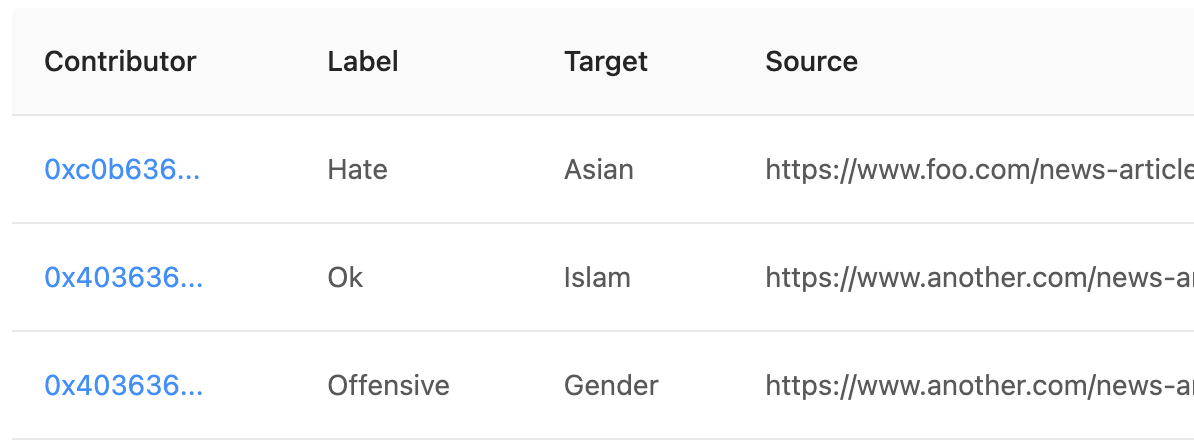

An open (anonymized) dataset lets anyone explore the labeled data underlying an AI decision.

*Authoritatively

Submissions and validations done by trusted members of targeted communities.

*Collaboratively

AI professionals working with targeted communities can make better algorithms.

*Verifiably

Cryptographic signatures protect data integrity while maintaining anonymity.

*Inclusively

Includes new and smaller communities with smaller data footprints.

*Continually

Hate speech is a moving target. Efficient contribution tools make it possible to keep up.
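As an illustration of the "Verifiably" point, the sketch below shows one way a label could be made tamper-evident without storing who submitted it. It is a minimal sketch under stated assumptions. The record fields and function names are hypothetical. The contributor is identified only by a pseudonym hashed from a secret key. HMAC-SHA256 from Python's standard library stands in for a real signature. HMAC is a shared-secret code, not a public-key signature, so checking it requires the same key. A production system would more likely use a public-key scheme such as Ed25519.

```python
import hashlib
import hmac
import json


# Assumption: each contributor holds a secret key; only a pseudonym derived from it is stored.
def pseudonym(contributor_key: bytes) -> str:
    """Stable, non-identifying contributor ID derived from the secret key."""
    return hashlib.sha256(b"pseudonym:" + contributor_key).hexdigest()[:16]


def canonical(record: dict) -> bytes:
    """Serialize with sorted keys so the same record always produces the same bytes."""
    return json.dumps(record, sort_keys=True, separators=(",", ":")).encode("utf-8")


def sign_submission(text: str, label: str, contributor_key: bytes) -> dict:
    record = {"text": text, "label": label, "contributor": pseudonym(contributor_key)}
    # HMAC-SHA256 stands in for a public-key signature in this sketch.
    tag = hmac.new(contributor_key, canonical(record), hashlib.sha256).hexdigest()
    return {**record, "tag": tag}


def verify_submission(signed: dict, contributor_key: bytes) -> bool:
    record = {k: v for k, v in signed.items() if k != "tag"}
    if record.get("contributor") != pseudonym(contributor_key):
        return False
    expected = hmac.new(contributor_key, canonical(record), hashlib.sha256).hexdigest()
    # Constant-time comparison avoids leaking how many characters matched.
    return hmac.compare_digest(expected, signed.get("tag", ""))


key = b"example-secret-key"
signed = sign_submission("example text", "hateful", key)
print(verify_submission(signed, key))                              # True
print(verify_submission({**signed, "label": "not_hateful"}, key))  # False: record was altered
```

With a public-key scheme, only the public key would be published alongside the pseudonym. Anyone could then check a record's integrity without being able to forge new ones.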

Tools for Communities

Add examples of hate speech quickly and anonymously from your unique viewpoint.

Label

Download our Chrome extension

Verify

Validate others' labels

Invite

Invite contributors you trust

Tools for AI Professionals

Use data that has been labeled by members of the marginalized groups themselves.

Download

Incorporate our dataset into your models

Collaborate

Help other data professionals define hate

Algorithms with a Conscience

Billions of times per day, algorithms decide what information we should see – ads, posts, videos, movies, news stories and more. The information we see shapes our worldview, for better or worse. By providing an accurate and transparent dataset of hate speech, we seek to help AI researchers create algorithms that protect vulnerable groups against discrimination, prejudice and hateful acts of violence.

Optimize for ethical business goals

An ethical AI layer acts like an immune system, balancing an AI's business goal so that ethics is also taken into account.
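One way to read the "immune system" idea is as a second signal blended into a ranking objective rather than replacing it. The sketch below assumes two models. A business model outputs an engagement score. A hate speech classifier (for example, one trained on this dataset) outputs a probability. The `Candidate` type, the linear penalty and its default weight are hypothetical choices for illustration, not a method defined by the project.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    item_id: str
    engagement: float  # hypothetical business-model score in [0, 1]
    hate_prob: float   # hypothetical hate speech probability in [0, 1]


def ethical_rank(candidates: list[Candidate], penalty: float = 2.0) -> list[Candidate]:
    """Rank by the business score minus a penalty proportional to the hate speech probability."""
    return sorted(candidates, key=lambda c: c.engagement - penalty * c.hate_prob, reverse=True)


feed = [Candidate("a", 0.9, 0.6), Candidate("b", 0.7, 0.05), Candidate("c", 0.4, 0.0)]
print([c.item_id for c in ethical_rank(feed, penalty=0.0)])  # ['a', 'b', 'c']: business goal only
print([c.item_id for c in ethical_rank(feed)])               # ['b', 'c', 'a']
```

A linear penalty leaves the business objective untouched for clean content. How large the weight should be is a policy decision, not something the code can settle.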

Who is the arbiter of "hate speech"?

Platforms that attempt to limit hate speech often find themselves forced to make subjective, nuanced and inflammatory judgment calls, many of them about worldviews and viewpoints they do not fully understand.

Definehate.org seeks to provide a curated, transparent and validated dataset, sourced from the marginalized communities themselves. AI researchers can make use of this dataset to train a fair and representative algorithm.

If a decision is questioned, open data is available to clarify how a decision was made, opening the door to productive discussion and mutual understanding.
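As a rough sketch of how open data could support that discussion, the code below retrieves the labeled examples that most resemble a questioned piece of content. It swaps in a deliberately simple technique: nearest-neighbor lookup by word overlap (Jaccard similarity). It also assumes a hypothetical `(text, label)` export of the dataset. It shows which examples resemble the content, not which ones a particular model actually relied on.

```python
import re


def tokens(text: str) -> set[str]:
    return set(re.findall(r"[a-z']+", text.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    """Word overlap: size of the intersection divided by size of the union."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def closest_examples(content: str, dataset: list[tuple[str, str]], k: int = 3):
    """Return the k labeled examples most similar to the questioned content."""
    target = tokens(content)
    scored = [(jaccard(target, tokens(text)), text, label) for text, label in dataset]
    return sorted(scored, key=lambda item: item[0], reverse=True)[:k]


# Hypothetical excerpt of the open dataset as (text, label) pairs.
dataset = [
    ("they should all go back where they came from", "hateful"),
    ("we should all go to the beach", "not_hateful"),
    ("the weather is nice today", "not_hateful"),
]
for score, text, label in closest_examples("they should go back where they came from", dataset, k=2):
    print(f"{score:.2f}  {label:12}  {text}")
```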

Definitions Built on Trust

To an AI, the "definition" of hate speech consists of an adequate number of correctly labeled examples. The quality and credibility of the examples affect the quality and credibility of the algorithm.
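To make that idea concrete, the sketch below trains a tiny multinomial Naive Bayes classifier from a handful of hypothetical labeled examples, using only the standard library. Naive Bayes is chosen purely because it is short enough to read in full. Real systems would use far more data and stronger models. What it illustrates is that the model's behavior comes entirely from the examples and their labels.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z']+", text.lower())


def train(examples: list[tuple[str, str]]):
    """Count words per label; for this model, the counts are the whole 'definition'."""
    word_counts: dict[str, Counter] = {}
    label_counts: Counter = Counter()
    vocab: set[str] = set()
    for text, label in examples:
        label_counts[label] += 1
        counts = word_counts.setdefault(label, Counter())
        for word in tokenize(text):
            counts[word] += 1
            vocab.add(word)
    return word_counts, label_counts, vocab


def predict(model, text: str) -> str:
    """Pick the label with the highest log-probability under multinomial Naive Bayes."""
    word_counts, label_counts, vocab = model
    total = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label, n in label_counts.items():
        score = math.log(n / total)
        denom = sum(word_counts[label].values()) + len(vocab)
        for word in tokenize(text):
            score += math.log((word_counts[label][word] + 1) / denom)  # add-one smoothing
        if score > best_score:
            best_label, best_score = label, score
    return best_label


# Hypothetical labeled examples; relabeling them changes what the model "means" by hate speech.
examples = [
    ("go back where you came from", "hateful"),
    ("your kind is not welcome here", "hateful"),
    ("welcome to the neighborhood", "not_hateful"),
    ("great to have you here", "not_hateful"),
]
model = train(examples)
print(predict(model, "go back where you came from"))  # hateful
print(predict(model, "welcome to the neighborhood"))  # not_hateful
```

Flipping the labels on the same texts flips the predictions. This is why the quality of the labels matters as much as their number.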

To create a trusted group of data labelers, each vulnerable community begins with one or more authorities whose identities are known, public and trusted: for example, a member of an employee resource group at a credible company, a member of an anti-defamation organization or a public activist. The community authorities invite trusted users who, in turn, invite other users. Each invited user's submissions are validated by others in their community. A high-trust user's validation carries more weight than that of a newly invited, low-trust member. As a new member's submissions are validated, their trust score increases. Uninformed or malicious users can have their influence removed entirely, including all of their past validations, allowing the dataset to be easily and completely purged of inaccurate data.
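The trust model described above can be sketched as an append-only event log that is replayed to compute trust scores and the accepted dataset. Everything concrete here is an assumption made for illustration: the starting trust values, the fixed trust increase per accepted submission, the acceptance threshold, and the `Community` class itself. Validations are weighted by the validator's trust at the time. Removing a user excludes all of their submissions and validations on the next replay, so any trust they helped others gain is recomputed as well. Two further assumptions apply. People a removed user invited remain members, since the text does not say otherwise. A submission also stays accepted once it crosses the threshold.

```python
from dataclasses import dataclass, field

# All constants are hypothetical; the text does not specify values.
AUTHORITY_TRUST = 1.0    # publicly known community authorities
NEW_MEMBER_TRUST = 0.1   # starting trust for an invited user
TRUST_STEP = 0.1         # trust gained per accepted submission
ACCEPT_THRESHOLD = 1.0   # trust-weighted agreement needed to accept a submission


@dataclass
class Community:
    authorities: set[str]
    events: list[tuple] = field(default_factory=list)  # append-only log, replayed on demand
    removed: set[str] = field(default_factory=set)

    def invite(self, inviter: str, invitee: str) -> None:
        self.events.append(("invite", inviter, invitee))

    def submit(self, user: str, sub_id: str, text: str, label: str) -> None:
        self.events.append(("submit", user, sub_id, text, label))

    def validate(self, validator: str, sub_id: str, agrees: bool) -> None:
        self.events.append(("validate", validator, sub_id, agrees))

    def remove(self, user: str) -> None:
        self.removed.add(user)  # takes effect on the next replay

    def replay(self) -> tuple[dict[str, float], dict[str, tuple[str, str, str]]]:
        """Rebuild trust scores and the accepted dataset from the log, ignoring removed users."""
        trust = {a: AUTHORITY_TRUST for a in self.authorities}
        submissions: dict[str, tuple[str, str, str]] = {}
        support: dict[str, float] = {}
        accepted: set[str] = set()
        for kind, actor, *rest in self.events:
            if actor not in trust:
                continue  # only existing members can act
            if kind == "invite":
                trust.setdefault(rest[0], NEW_MEMBER_TRUST)
                continue
            if actor in self.removed:
                continue  # drop every submission and validation by a removed user
            if kind == "submit":
                sub_id, text, label = rest
                submissions[sub_id] = (actor, text, label)
                support[sub_id] = 0.0
            elif kind == "validate":
                sub_id, agrees = rest
                if sub_id not in submissions or submissions[sub_id][0] == actor:
                    continue  # unknown (or purged) submission, or self-validation
                # Each validation is weighted by the validator's trust at that point in time.
                support[sub_id] += trust[actor] if agrees else -trust[actor]
                if sub_id not in accepted and support[sub_id] >= ACCEPT_THRESHOLD:
                    accepted.add(sub_id)
                    author = submissions[sub_id][0]
                    trust[author] = min(AUTHORITY_TRUST, trust[author] + TRUST_STEP)
        for user in self.removed:
            trust.pop(user, None)
        return trust, {s: submissions[s] for s in accepted}


c = Community(authorities={"alice"})
c.invite("alice", "bob")
c.invite("alice", "mallory")
c.submit("bob", "s1", "example text one", "hateful")
c.validate("alice", "s1", True)     # authority weight 1.0 meets the threshold
c.submit("mallory", "s2", "bogus example", "hateful")
c.validate("alice", "s2", True)     # accepted by mistake
c.validate("mallory", "s1", False)  # mallory also argues against a good label
trust, data = c.replay()
print(sorted(data))                 # ['s1', 's2']
c.remove("mallory")
trust, data = c.replay()
print(sorted(data), sorted(trust))  # ['s1'] ['alice', 'bob']
```

Replaying a log is a simple way to get the "removed entirely" property. Instead of undoing each effect of a bad actor, the state is rebuilt as if they had never submitted or validated anything.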

This organic trust system allows community leaders to cast a wide net and quickly recover from any accidental or deliberate acts of data corruption. This model also allows for organic expansion into other languages and vulnerable communities without gatekeepers and centralized bottlenecks.

Censorship and Freedom of Speech

Freedom of speech is the cornerstone of any free society. Heated debates, disagreements and unpopular opinions sometimes become the very seeds of productive change. This project is not intended to be political, nor is it a statement on censorship. It is an attempt to define hate speech from the perspective of those in vulnerable groups: people who face prejudice, malice and violence stemming from hateful speech. How this dataset is used depends on the context. For example, an AI writing assistant like Grammarly may use it to alert a writer to potentially hateful content; content moderation teams may use it as a searchable reference when making difficult decisions; and a content filter for young children may choose to remove a piece of content entirely. We hope that making this data freely available helps AI researchers and platform designers identify the seeds of hatred before they grow into unrest and violence, and build tools that foster respect and understanding between people of different backgrounds, beliefs and identities.
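The contexts listed above can be sketched as per-context policies applied to the same underlying score. The action names, context keys and thresholds below are hypothetical. The score is assumed to come from some model trained on the dataset. The sketch only shows that one dataset can drive very different decisions depending on where it is used.

```python
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    SUGGEST_REVISION = "suggest_revision"            # e.g. a writing assistant
    SHOW_SIMILAR_EXAMPLES = "show_similar_examples"  # e.g. a moderator's reference lookup
    REMOVE = "remove"                                # e.g. a filter for young children


# Hypothetical thresholds on a hate speech score in [0, 1]; stricter contexts act earlier.
POLICIES = {
    "writing_assistant": (0.5, Action.SUGGEST_REVISION),
    "moderation_reference": (0.4, Action.SHOW_SIMILAR_EXAMPLES),
    "child_safe_filter": (0.2, Action.REMOVE),
}


def decide(context: str, hate_score: float) -> Action:
    threshold, action = POLICIES[context]
    return action if hate_score >= threshold else Action.ALLOW


for context in POLICIES:
    print(context, decide(context, 0.3).value)
```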